```text
<Info | 15 non-empty values
 bads: 2 items (MEG 2443, EEG 053)
 ch_names: MEG 0113, MEG 0112, MEG 0111, MEG 0122, MEG 0123, MEG 0121, MEG ...
 chs: 204 Gradiometers, 102 Magnetometers, 9 Stimulus, 60 EEG, 1 EOG
 custom_ref_applied: False
 dev_head_t: MEG device -> head transform
 dig: 146 items (3 Cardinal, 4 HPI, 61 EEG, 78 Extra)
 file_id: 4 items (dict)
 highpass: 0.1 Hz
 hpi_meas: 1 item (list)
 hpi_results: 1 item (list)
 lowpass: 40.0 Hz
 meas_date: 2002-12-03 19:01:10 UTC
 meas_id: 4 items (dict)
 nchan: 376
 projs: PCA-v1: off, PCA-v2: off, PCA-v3: off, Average EEG reference: off
 sfreq: 150.2 Hz
>
```

MNE-Python

MNE-Python Tutorials

- Alexandre Gramfort, Martin Luessi, Eric Larson, Denis A. Engemann, Daniel Strohmeier, Christian Brodbeck, Roman Goj, Mainak Jas, Teon Brooks, Lauri Parkkonen, and Matti S. Hämäläinen. MEG and EEG data analysis with MNE-Python. Frontiers in Neuroscience, 7(267):1–13, 2013. doi:10.3389/fnins.2013.00267.

MNE-Python: Introductory Tutorials

Overview: Setup and Load Data

```python
import os

import numpy as np

import mne

sample_data_folder = mne.datasets.sample.data_path()
sample_data_raw_file = os.path.join(sample_data_folder, 'MEG', 'sample',
                                    'sample_audvis_filt-0-40_raw.fif')
raw = mne.io.read_raw_fif(sample_data_raw_file)

print(raw)
```

```text
Opening raw data file /Users/mears/mne_data/MNE-sample-data/MEG/sample/sample_audvis_filt-0-40_raw.fif...
    Read a total of 4 projection items:
        PCA-v1 (1 x 102) idle
        PCA-v2 (1 x 102) idle
        PCA-v3 (1 x 102) idle
        Average EEG reference (1 x 60) idle
    Range : 6450 ... 48149 = 42.956 ... 320.665 secs
Ready.
<Raw | sample_audvis_filt-0-40_raw.fif, 376 x 41700 (277.7 s), ~3.3 MB, data not loaded>
```
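The `Range : 6450 ... 48149 = 42.956 ... 320.665 secs` line converts sample indices to seconds using the sampling frequency. The arithmetic can be checked in plain Python. This sketch assumes the sample file's exact rate is 150.15374755859375 Hz (the `Info` summary rounds it to 150.2 Hz). The variable names are illustrative and only mirror the `first_samp` and `last_samp` attributes of MNE `Raw` objects:

```python
# Sample indices reported in the "Range" line of the read_raw_fif log
first_samp = 6450
last_samp = 48149
# Assumed exact sampling rate of the sample file (displayed as 150.2 Hz)
sfreq = 150.15374755859375

n_times = last_samp - first_samp + 1  # samples are counted inclusively
first_time = first_samp / sfreq       # seconds since acquisition start
last_time = last_samp / sfreq
duration = n_times / sfreq            # length of the stored recording (s)

print(n_times, round(first_time, 3), round(last_time, 3), round(duration, 1))
```

The inclusive sample count matches the `376 x 41700` shape reported for `raw`. Because the first stored sample is nonzero, the time range starts at about 43 s rather than at 0.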

Overview: Raw Data Class

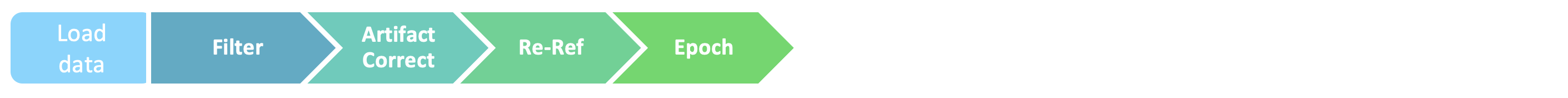

Overview: Preprocessing

- MNE methods
  - Filtering: `mne.filter.create_filter()`, `mne.filter.notch_filter()`, `raw.resample()`
  - Artifact correction: `mne.channels.DigMontage()`, `.interpolate_bads()`, `mne.set_eeg_reference()`, `mne.preprocessing.ICA()`, `mne.preprocessing.regress_artifact()`

Alternatives to Artifact Correction

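- Autoreject package
  - Automatically rejects bad trials and repairs bad sensors.
  - Estimates a rejection threshold for each sensor, yielding a trial-wise map of bad sensors.
  - Repairs a trial by interpolating its bad sensors, or excludes the trial when too many sensors are bad.
- MNE-ICALabel package
  - Labels independent components (ICs), porting the ICLabel classifier from EEGLAB.
  - Uses 3 input features: topographic image, power spectral density (PSD), and autocorrelation.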
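Autoreject learns its per-sensor thresholds with cross-validation, which is too involved for a slide. The trial-wise keep/repair/exclude decision it feeds can still be sketched. This is a simplified illustration, not the autoreject API: it swaps in a plain robust rule (median plus `k` median absolute deviations of the peak-to-peak amplitude) for autoreject's learned thresholds, and `classify_trials`, `k`, and `max_bad` are hypothetical names:

```python
import numpy as np


def classify_trials(data, k=6.0, max_bad=2):
    """Flag bad sensors per trial, then decide to keep, repair, or exclude.

    data: array of shape (n_trials, n_sensors, n_times).
    k: how many median absolute deviations above the median counts as bad.
    max_bad: most bad sensors a trial may have and still be repaired.
    """
    ptp = np.ptp(data, axis=2)                  # peak-to-peak, (n_trials, n_sensors)
    med = np.median(ptp, axis=0)                # typical amplitude per sensor
    mad = np.median(np.abs(ptp - med), axis=0)  # robust spread per sensor
    thresholds = med + k * mad                  # one threshold per sensor
    bad = ptp > thresholds                      # trial-wise map of bad sensors
    actions = []
    for n_bad in bad.sum(axis=1):
        if n_bad == 0:
            actions.append('keep')
        elif n_bad <= max_bad:
            actions.append('repair')   # e.g. interpolate the bad sensors
        else:
            actions.append('exclude')  # too many bad sensors to repair
    return bad, actions


# Toy data: 20 trials x 5 sensors x 50 samples of unit-variance noise
rng = np.random.default_rng(0)
data = rng.standard_normal((20, 5, 50))
data[3, 0, 25:] += 100.0  # a jump on one sensor in trial 3
data[5, :, 25:] += 100.0  # a jump on every sensor in trial 5

bad, actions = classify_trials(data)
print(actions[3], actions[5])
```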

Overview: Detecting experimental events

```python
events = mne.find_events(raw, stim_channel='STI 014')
print(events[:10])  # show the first 10
print(events.shape)
```

```text
319 events found
Event IDs: [ 1  2  3  4  5 32]
[[6994    0    2]
 [7086    0    3]
 [7192    0    1]
 [7304    0    4]
 [7413    0    2]
 [7506    0    3]
 [7612    0    1]
 [7709    0    4]
 [7810    0    2]
 [7916    0    3]]
(319, 3)
```

Python Tip

NumPy arrays can be sliced and indexed.
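A small illustration of slicing and boolean indexing on an array with the same layout as `events` (column 0: sample index, column 1: stim-channel value just before the event, column 2: event ID). Only the first five rows printed above are used:

```python
import numpy as np

# First five rows of the events array: [sample, previous value, event ID]
events = np.array([
    [6994, 0, 2],
    [7086, 0, 3],
    [7192, 0, 1],
    [7304, 0, 4],
    [7413, 0, 2],
])

first_three = events[:3]            # slice rows, like events[:10]
event_ids = events[:, 2]            # third column holds the event IDs
right_ear = events[event_ids == 2]  # boolean mask keeps matching rows
onsets = right_ear[:, 0]            # sample indices of those events
print(onsets)
```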

Overview: Detecting experimental events

- Python dictionary objects:
  - collections of key-value pairs

Python Tip

Dictionaries are created with `dict()` or with `{}` literals.

| Event ID | Condition |
|---|---|
| 1 | auditory stimulus (tone) to the left ear |
| 2 | auditory stimulus (tone) to the right ear |
| 3 | visual stimulus (checkerboard) to the left visual field |
| 4 | visual stimulus (checkerboard) to the right visual field |
| 5 | smiley face (catch trial) |
| 32 | subject button press |
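`mne.Epochs` accepts an `event_id` dictionary that maps condition names to the event numbers in this table. Here is a sketch of such a mapping. The slash-separated names for IDs 1 to 4 match the `conds_we_care_about` list used when epoching. The names `'smiley'` and `'buttonpress'` for IDs 5 and 32 are assumptions chosen for illustration:

```python
# Map descriptive condition names to the trigger values in the table
event_dict = {
    'auditory/left': 1,
    'auditory/right': 2,
    'visual/left': 3,
    'visual/right': 4,
    'smiley': 5,
    'buttonpress': 32,
}

# The same mapping built with dict(); keyword arguments cannot contain '/',
# so the key-value pairs are passed as a list of tuples instead
same_dict = dict([('auditory/left', 1), ('auditory/right', 2),
                  ('visual/left', 3), ('visual/right', 4),
                  ('smiley', 5), ('buttonpress', 32)])

print(event_dict['visual/left'])  # look up a value by its key
```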

Overview: Detecting experimental events

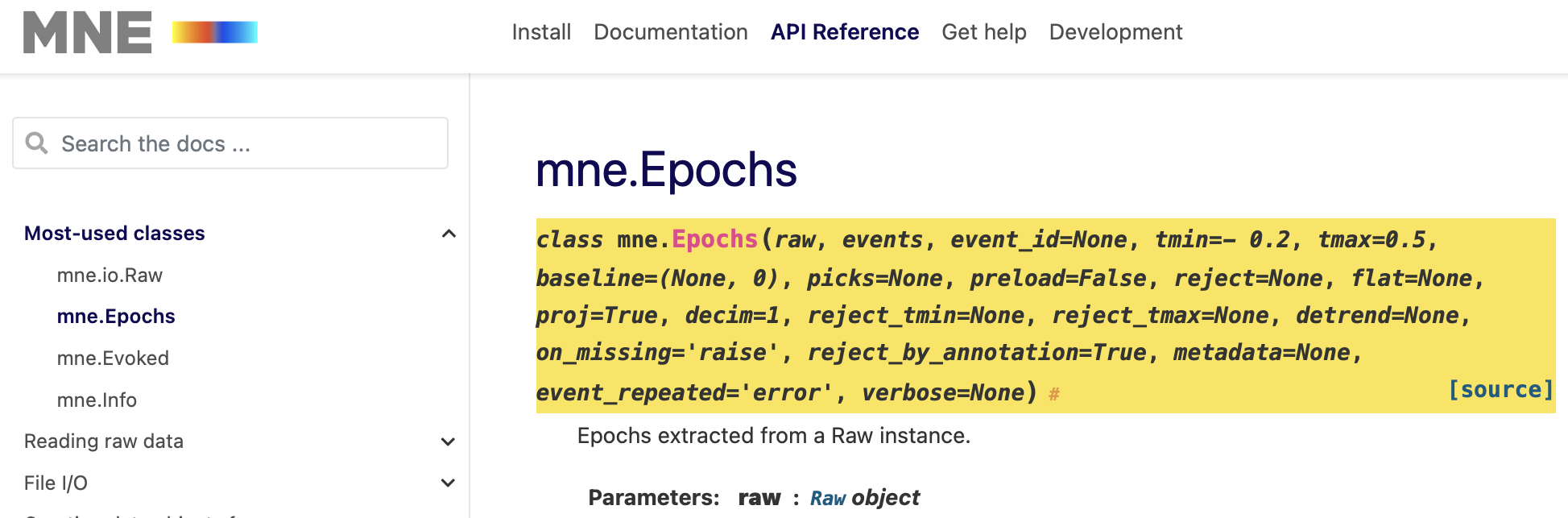

Overview: Epoching continuous data

Consult the MNE function reference.

Getting the input format right can be challenging in MNE; `help(mne.Epochs)` prints the full parameter documentation.

Overview: Epoching continuous data

```python
epochs = mne.Epochs(raw, events, event_id=event_dict, tmin=-0.2, tmax=0.5,
                    reject=reject_criteria, preload=True)
```

```text
Not setting metadata
319 matching events found
Setting baseline interval to [-0.19979521315838786, 0.0] sec
Applying baseline correction (mode: mean)
Created an SSP operator (subspace dimension = 4)
4 projection items activated
Loading data for 319 events and 106 original time points ...
    Rejecting epoch based on EEG : ['EEG 001', 'EEG 002', 'EEG 003', 'EEG 007']
    Rejecting epoch based on EOG : ['EOG 061']
    Rejecting epoch based on MAG : ['MEG 1711']
    Rejecting epoch based on EOG : ['EOG 061']
    Rejecting epoch based on EOG : ['EOG 061']
    Rejecting epoch based on MAG : ['MEG 1711']
    Rejecting epoch based on EEG : ['EEG 008']
    Rejecting epoch based on EOG : ['EOG 061']
    Rejecting epoch based on EOG : ['EOG 061']
    Rejecting epoch based on EOG : ['EOG 061']
10 bad epochs dropped
```
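`reject_criteria` is not defined in the call above. The MNE overview tutorial passes a dictionary of peak-to-peak thresholds per channel type in SI units, along the lines of `mag=4000e-15`, `grad=4000e-13`, `eeg=150e-6`, `eog=250e-6`. The rule behind the log is that an epoch is dropped when any channel's peak-to-peak amplitude exceeds the limit for its type. Below is a NumPy sketch of that rule on toy data. `reject_by_ptp` and the arrays are hypothetical, and MNE's own implementation handles more cases (flat-signal criteria, rejection time windows):

```python
import numpy as np


def reject_by_ptp(data, ch_types, reject):
    """Return a boolean mask of epochs to keep.

    data: (n_epochs, n_channels, n_times); ch_types: one type per channel;
    reject: {channel type: maximum allowed peak-to-peak amplitude}.
    """
    ptp = np.ptp(data, axis=2)  # (n_epochs, n_channels)
    keep = np.ones(data.shape[0], dtype=bool)
    for ch_type, limit in reject.items():
        idx = [i for i, t in enumerate(ch_types) if t == ch_type]
        if idx:
            # An epoch fails if any channel of this type exceeds the limit
            keep &= ~(ptp[:, idx] > limit).any(axis=1)
    return keep


reject_criteria = dict(mag=4000e-15, grad=4000e-13, eeg=150e-6, eog=250e-6)
ch_types = ['eeg', 'eeg', 'eog']

rng = np.random.default_rng(42)
data = rng.normal(scale=10e-6, size=(4, 3, 100))  # ~10 µV noise
data[1, 0, 50:] += 300e-6                         # EEG jump in epoch 1
data[3, 2, 20:40] += 400e-6                       # EOG "blink" in epoch 3

keep = reject_by_ptp(data, ch_types, reject_criteria)
print(keep)
```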

Overview: Epoching continuous data

```python
conds_we_care_about = ['auditory/left', 'auditory/right',
                       'visual/left', 'visual/right']

# this operates in-place
epochs.equalize_event_counts(conds_we_care_about)
aud_epochs = epochs['auditory']
vis_epochs = epochs['visual']
del raw, epochs  # free up memory
```

```text
Dropped 7 epochs: 121, 195, 258, 271, 273, 274, 275
```

- Finer points for selecting conditions:
  - Event IDs containing forward slashes (e.g. `'auditory/left'`) are split into tags, and each tag can be selected on its own.
  - Selecting only the first part (`epochs['auditory']` or `epochs['visual']`) pools the left and right conditions.
  - Tag order does not matter: `epochs['left']` pools the auditory and visual left conditions, and `epochs['left/auditory']` selects the same epochs as `epochs['auditory/left']`.
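The tag matching described above can be mimicked in plain Python. This is a simplified stand-in for what a selection like `epochs['auditory']` does, not MNE's code. `select_by_tags` is a hypothetical helper, and the `event_dict` mapping is assumed to use the slash-separated names from `conds_we_care_about`:

```python
def select_by_tags(event_id, query):
    """Return condition names whose '/'-separated tags include every query tag."""
    wanted = set(query.split('/'))
    return [name for name in event_id if wanted.issubset(name.split('/'))]


event_dict = {'auditory/left': 1, 'auditory/right': 2,
              'visual/left': 3, 'visual/right': 4,
              'smiley': 5, 'buttonpress': 32}

print(select_by_tags(event_dict, 'auditory'))       # pools left and right
print(select_by_tags(event_dict, 'left'))           # pools auditory and visual
print(select_by_tags(event_dict, 'left/auditory'))  # tag order is irrelevant
```

Treating the tags as a set is what makes the order irrelevant: `'left/auditory'` and `'auditory/left'` produce the same set.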

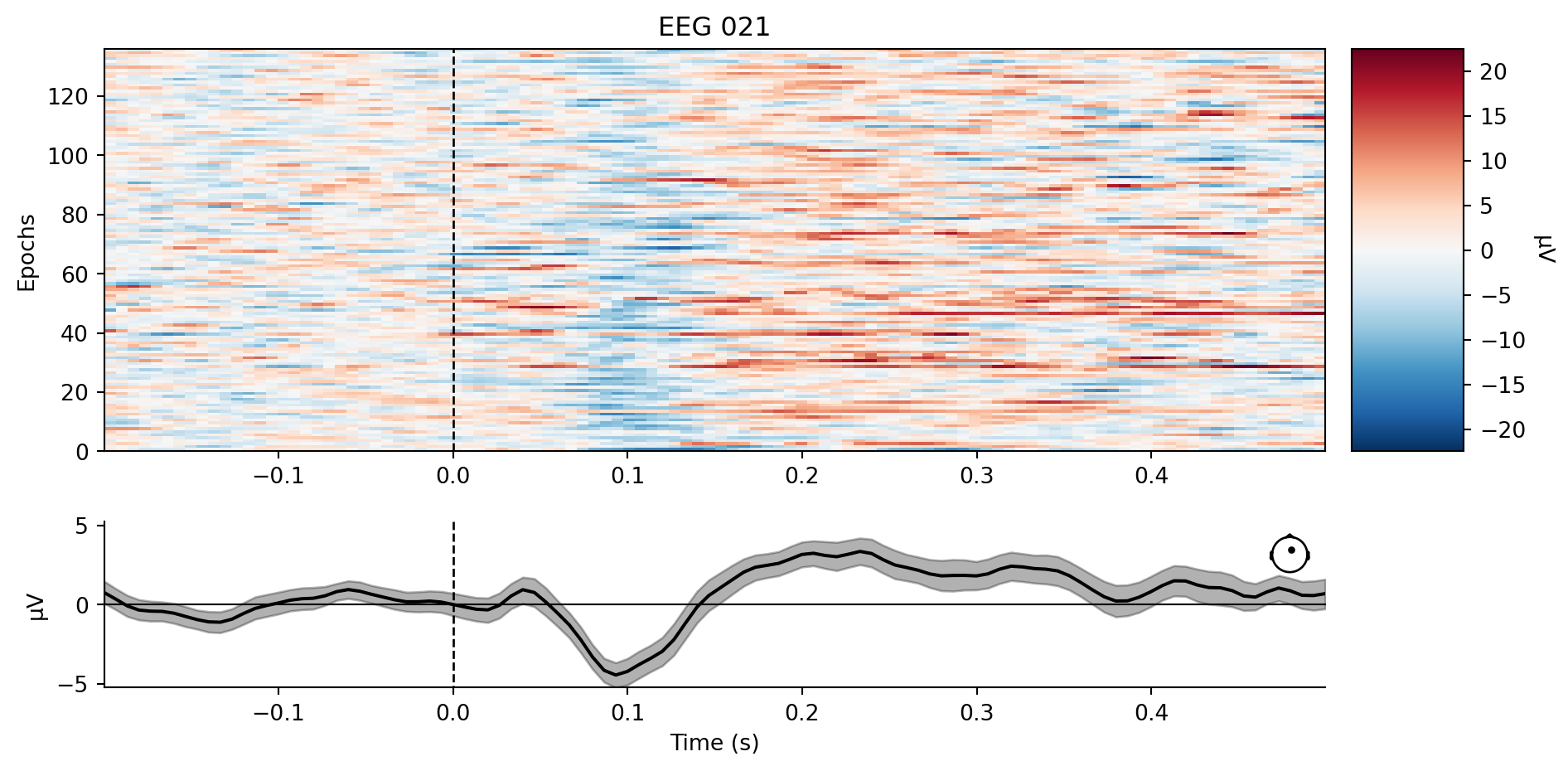

Overview: Epochs Plot-types

- MNE: pick and select
  - Pick channels and data types
  - Slice and select epochs
  - Copy and crop epochs to a time segment

Note

Select the specific data you want to plot before plotting: MNE will often try to plot all of the data it is given.

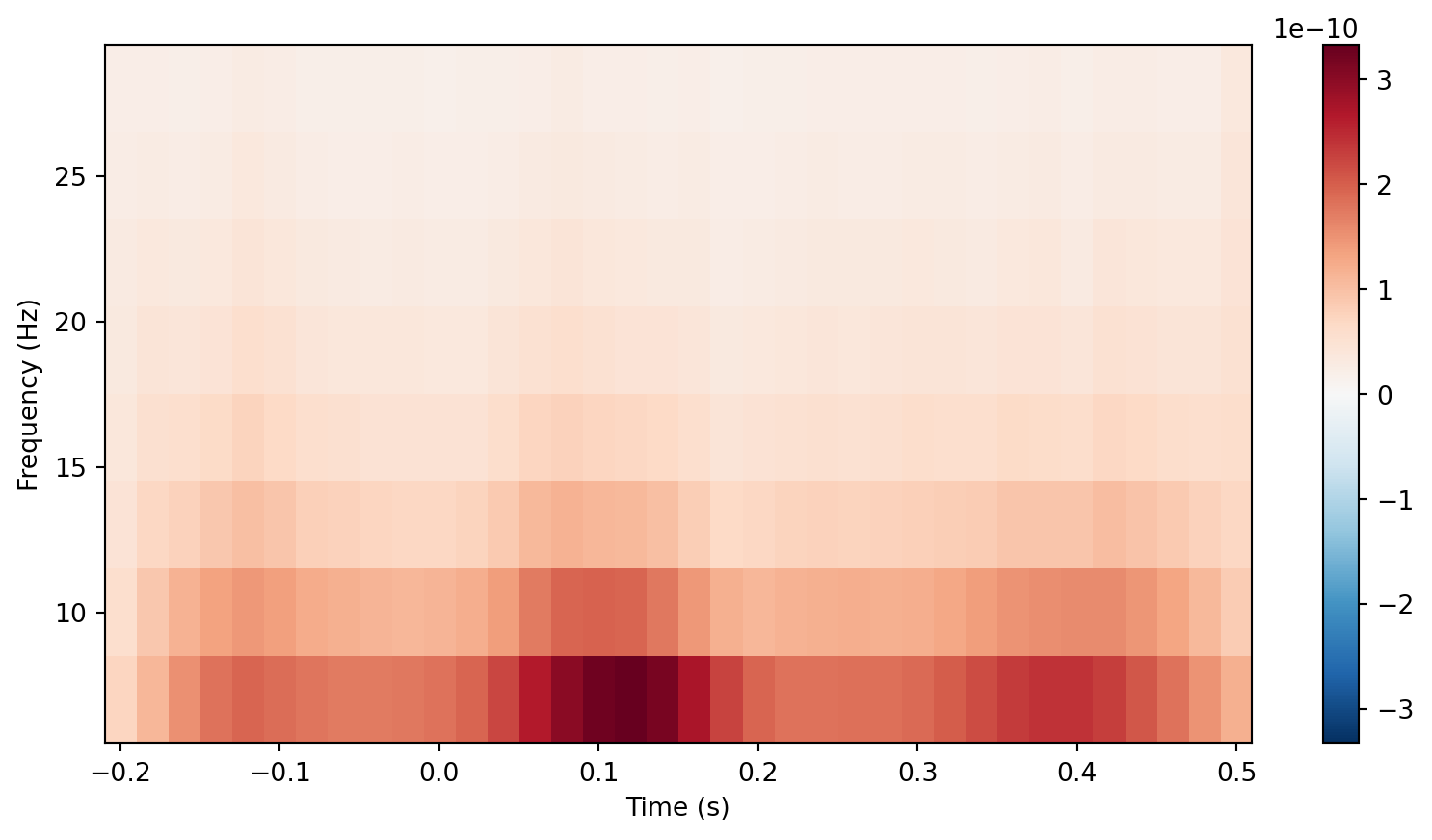

Overview: Time-frequency analysis

```text
Removing projector <Projection | PCA-v1, active : True, n_channels : 102>
Removing projector <Projection | PCA-v2, active : True, n_channels : 102>
Removing projector <Projection | PCA-v3, active : True, n_channels : 102>
Removing projector <Projection | PCA-v1, active : True, n_channels : 102>
Removing projector <Projection | PCA-v2, active : True, n_channels : 102>
Removing projector <Projection | PCA-v3, active : True, n_channels : 102>
No baseline correction applied
```

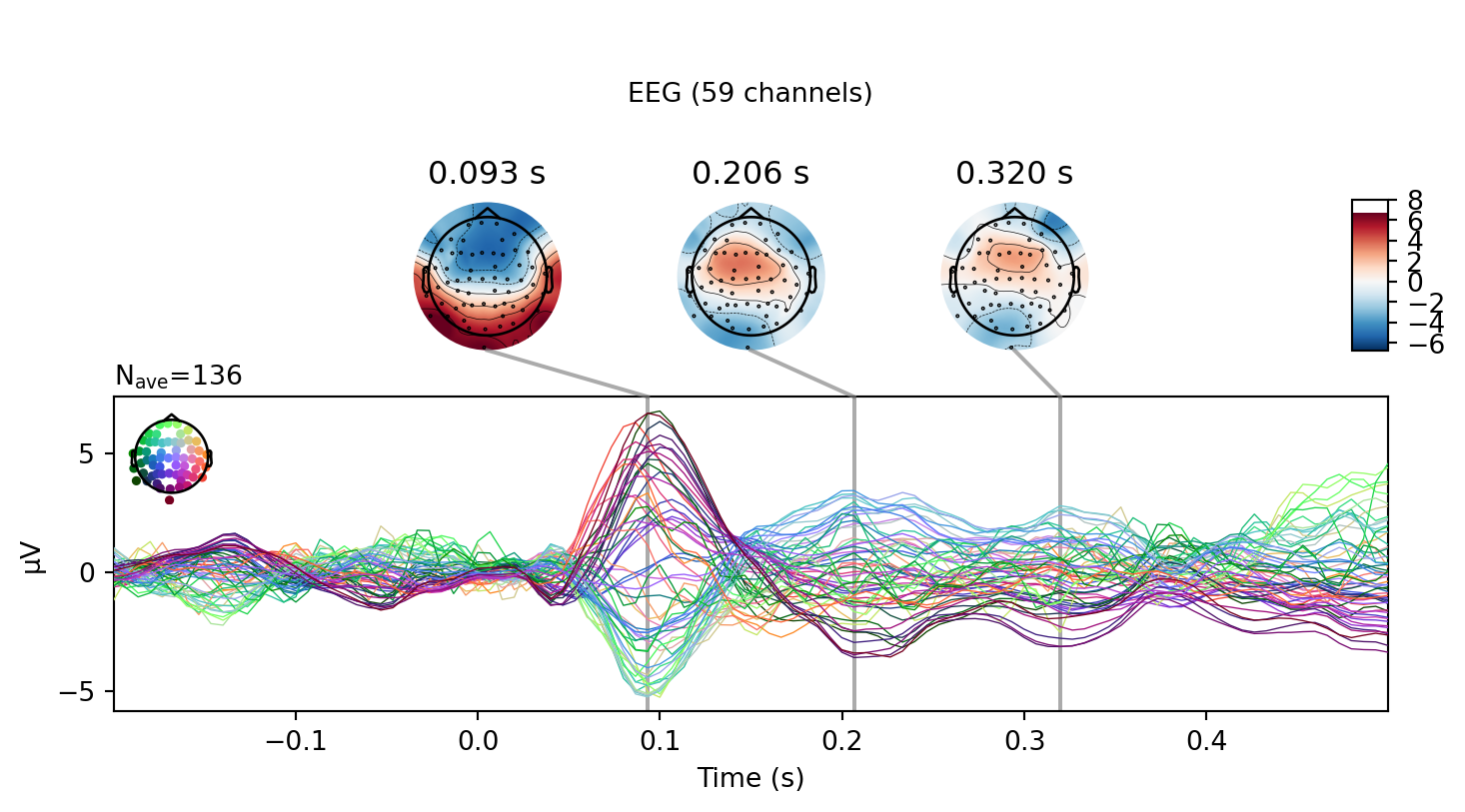
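MNE computes time-frequency representations with Morlet wavelets, for example through `mne.time_frequency.tfr_morlet`. The sketch below shows the core idea from scratch on a synthetic 15 Hz sinusoid: convolve the signal with a complex wavelet at each frequency, then take the squared magnitude. It rests on simplifying assumptions (unit-energy wavelets, a single channel, no epoch averaging or baseline correction). The frequency grid and `n_cycles` values are illustrative choices, not taken from the figure:

```python
import numpy as np


def morlet_power(signal, sfreq, freqs, n_cycles):
    """Power of `signal` at each frequency, via complex Morlet wavelets.

    Returns an array of shape (len(freqs), len(signal)).
    """
    power = np.empty((len(freqs), len(signal)))
    for i, (freq, cycles) in enumerate(zip(freqs, n_cycles)):
        sigma_t = cycles / (2 * np.pi * freq)  # width of the Gaussian envelope (s)
        t = np.arange(-3.5 * sigma_t, 3.5 * sigma_t, 1 / sfreq)
        wavelet = np.exp(2j * np.pi * freq * t) * np.exp(-t ** 2 / (2 * sigma_t ** 2))
        wavelet /= np.linalg.norm(wavelet)     # unit energy at every frequency
        coefs = np.convolve(signal, wavelet, mode='same')
        power[i] = np.abs(coefs) ** 2          # squared magnitude = power
    return power


# Synthetic test signal: a 15 Hz sinusoid sampled at 150 Hz for 2 s
sfreq = 150.0
times = np.arange(0, 2, 1 / sfreq)
signal = np.sin(2 * np.pi * 15 * times)

freqs = np.arange(7, 30, 3)  # 7, 10, ..., 28 Hz
n_cycles = freqs / 2         # more cycles at higher frequencies
power = morlet_power(signal, sfreq, freqs, n_cycles)

# Average over the middle of the signal to avoid edge effects
peak_freq = freqs[np.argmax(power[:, 50:-50].mean(axis=1))]
print(peak_freq)
```

Scaling `n_cycles` with frequency trades time resolution against frequency resolution. With `n_cycles = freqs / 2` the time window happens to be the same at every frequency.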

Overview: Estimating evoked responses

<Evoked | '0.50 × auditory/left + 0.50 × auditory/right' (average, N=136), -0.1998 – 0.49949 sec, baseline -0.199795 – 0 sec, 366 ch, ~3.6 MB>

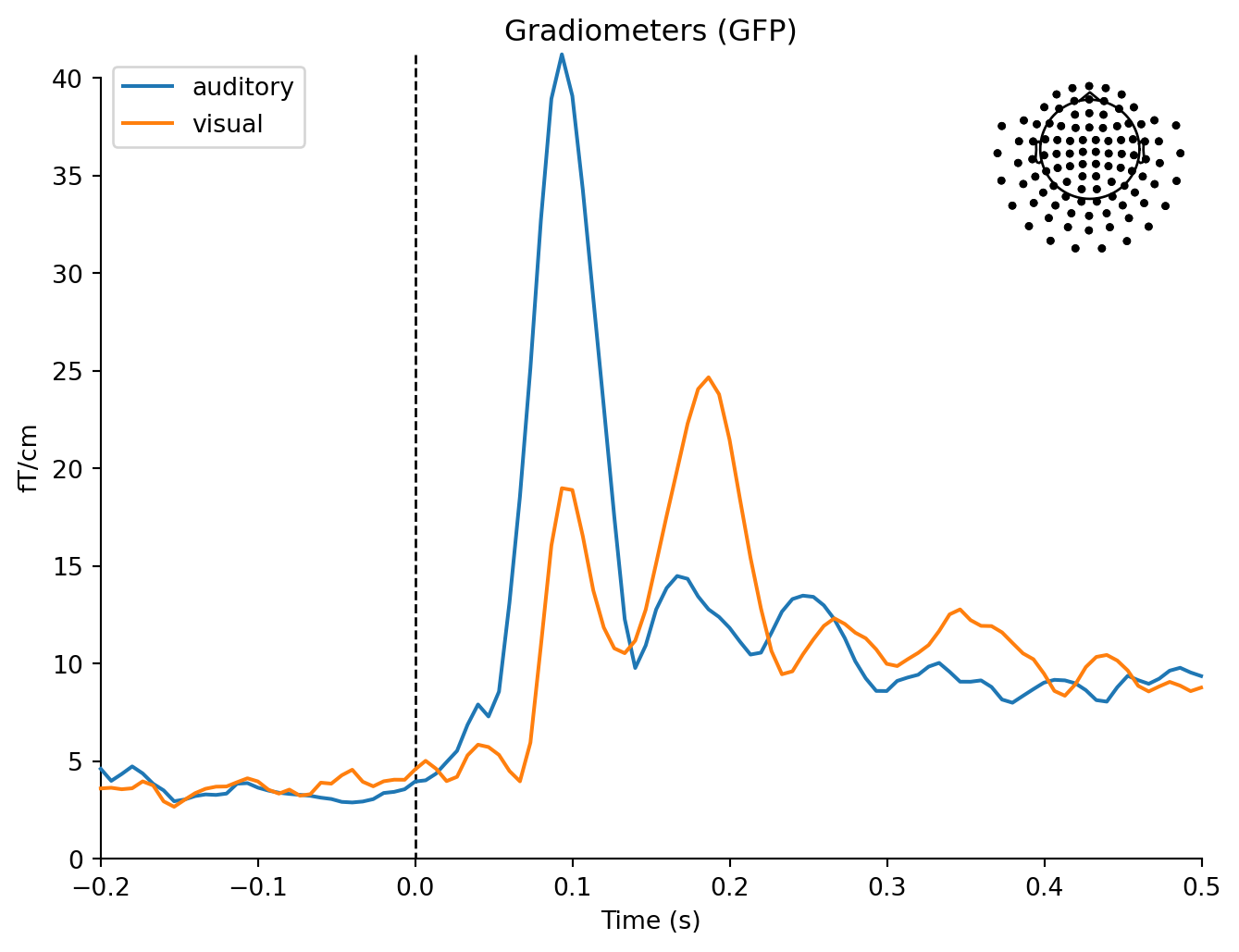

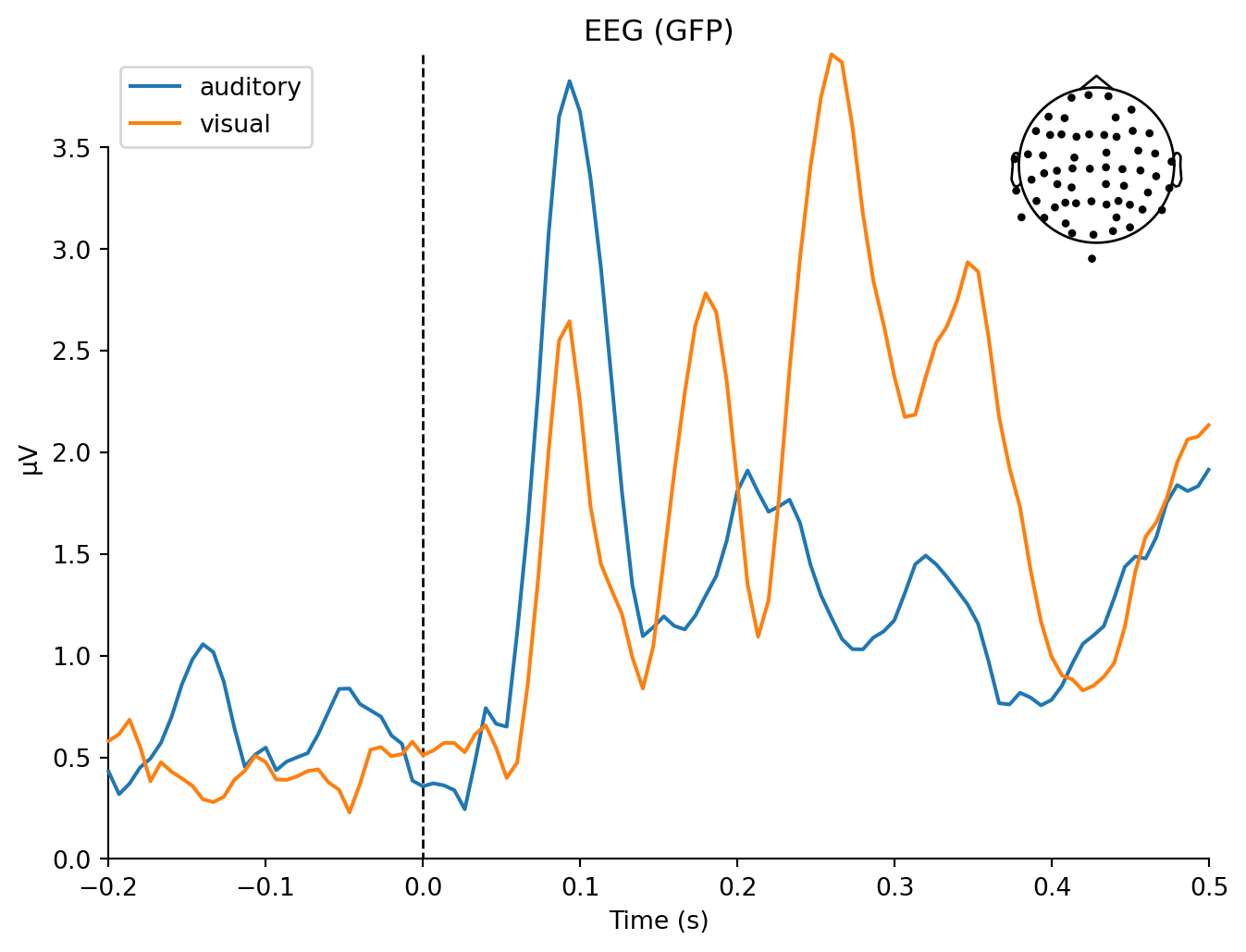

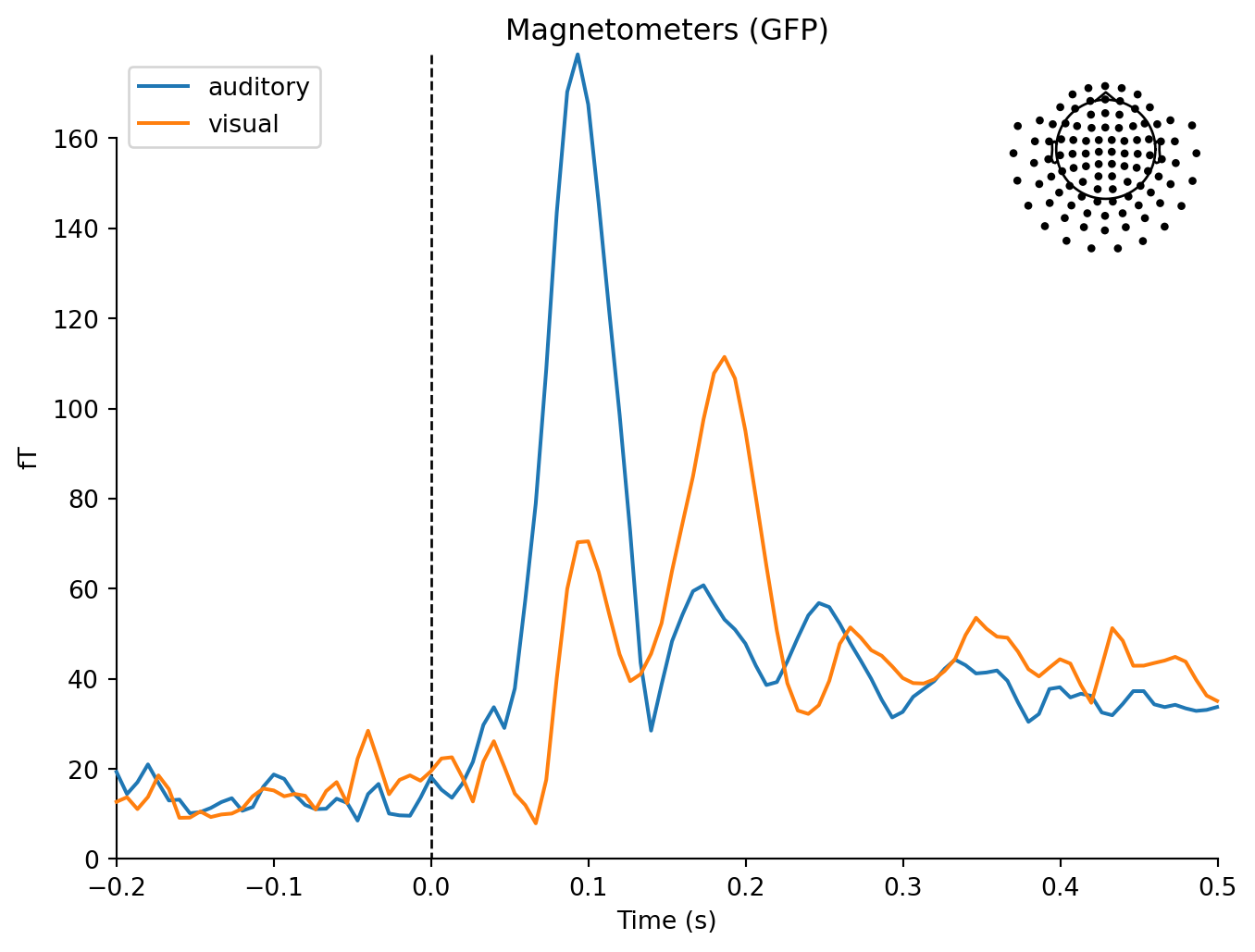

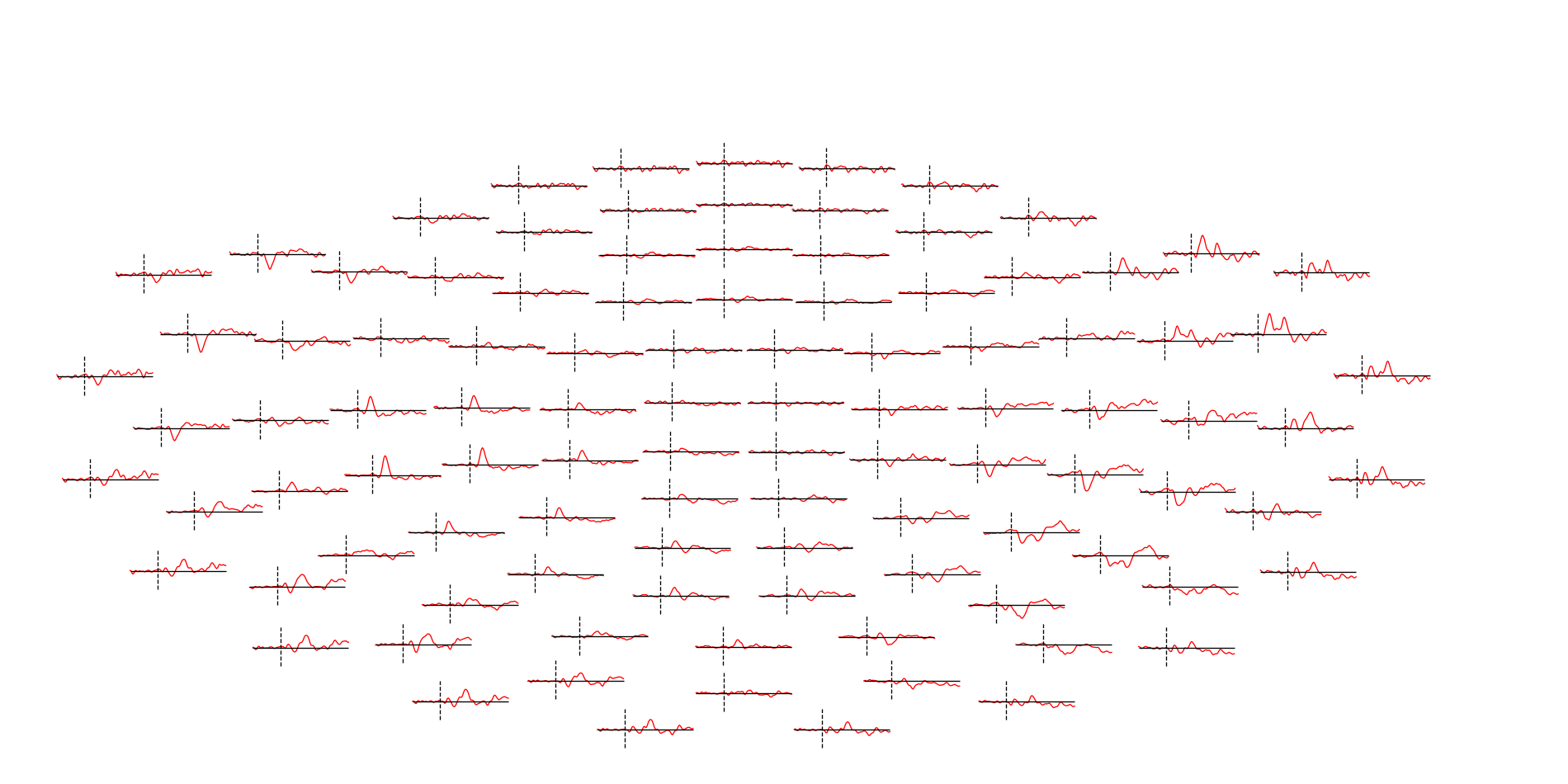

Overview: Estimating evoked responses

```python
mne.viz.plot_compare_evokeds(dict(auditory=aud_evoked, visual=vis_evoked),
                             legend='upper left', show_sensors='upper right')
```

```text
Multiple channel types selected, returning one figure per type.
combining channels using "gfp"
combining channels using "gfp"
combining channels using "gfp"
combining channels using "gfp"
combining channels using "gfp"
combining channels using "gfp"
```
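The `combining channels using "gfp"` messages mean that each figure summarizes all channels of one type by their global field power (GFP). Below is a NumPy sketch of the textbook definition: the population standard deviation across channels at each time point. MNE's exact handling can differ by channel type, so treat this as an approximation. `global_field_power` is a hypothetical helper:

```python
import numpy as np


def global_field_power(data):
    """GFP of an (n_channels, n_times) array: std across channels per time point."""
    return data.std(axis=0)  # population standard deviation (ddof=0)


# Toy evoked data: 3 channels x 4 time points
data = np.array([[1.0, 2.0, 0.0, 5.0],
                 [1.0, 0.0, 0.0, -5.0],
                 [1.0, 1.0, 0.0, 0.0]])
gfp = global_field_power(data)
print(gfp)
```

GFP is zero whenever all channels share the same value (first and third time points). It grows as the spatial pattern becomes more differentiated.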

Overview: Estimating evoked responses

```text
Projections have already been applied. Setting proj attribute to True.
Removing projector <Projection | PCA-v1, active : True, n_channels : 102>
Removing projector <Projection | PCA-v2, active : True, n_channels : 102>
Removing projector <Projection | PCA-v3, active : True, n_channels : 102>
```

Overview: Estimating evoked responses

Python Tip

Lists are created with `list()` or with `[]` literals.
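A quick illustration, reusing the condition names from `conds_we_care_about`:

```python
# A list literal and the list() constructor build equivalent lists
conds = ['auditory/left', 'auditory/right', 'visual/left', 'visual/right']
conds_copy = list(conds)     # list() builds a new list from any iterable

conds_copy.append('smiley')  # lists are mutable; the original is unchanged
aud_conds = conds[:2]        # slicing works much as with NumPy arrays
print(len(conds), len(conds_copy), aud_conds)
```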

Overview: Estimating evoked responses

<Evoked | '(0.50 × auditory/left + 0.50 × auditory/right) - (0.50 × visual/left + 0.50 × visual/right)' (average, N=68.0), -0.1998 – 0.49949 sec, baseline -0.199795 – 0 sec, 366 ch, ~3.6 MB>
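The `N=68.0` of this difference wave follows from how the effective number of averages is propagated when evoked responses are combined (in MNE, via `mne.combine_evoked`). For weights w_i applied to averages of n_i trials, the new value is 1 / Σ(w_i² / n_i). A sketch of that arithmetic, and of the weighted sum itself, on toy arrays. It assumes each of the four conditions contributed 68 epochs after equalization, which is consistent with the `N=136` of the pooled auditory average. `combine` is a hypothetical helper:

```python
import numpy as np


def combine(averages, naves, weights):
    """Weighted sum of averaged responses plus the effective number of averages."""
    data = sum(w * a for w, a in zip(weights, averages))
    nave = 1.0 / sum(w ** 2 / n for w, n in zip(weights, naves))
    return data, nave


# Toy evoked arrays (channels x times); values are illustrative only
aud_left = np.array([[1.0, 2.0]])
aud_right = np.array([[3.0, 4.0]])
vis = np.array([[0.5, 0.5]])

# 0.50 x left + 0.50 x right, each from 68 epochs -> effective N of 136
aud, aud_nave = combine([aud_left, aud_right], [68, 68], [0.5, 0.5])
# auditory - visual, each with N = 136 -> effective N of 68
diff, diff_nave = combine([aud, vis], [aud_nave, 136], [1, -1])
print(aud_nave, diff_nave, diff)
```

The difference of two averages is noisier than either one, so its effective N is halved rather than summed.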

Overview: Estimating evoked responses

Removing projector <Projection | Average EEG reference, active : True, n_channels : 60>

Overview

Raw: Working with Continuous Data

Epochs: Segmenting Data

Evoked: Averaging

Preprocessing Tutorials

MNE-Python Examples

Migrating: EEGLAB to MNE-Python

Input - Output

Installing MNE-Python

Preprocessing Examples

MNE-Python

- classes (CamelCase names, e.g. `mne.Epochs`)

- functions (lowercase snake_case names, e.g. `mne.find_events`)
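Both naming conventions can be reproduced in plain Python, along with the class introspection that yields a `mappingproxy` like the one printed for `Raw`. The class and function here are hypothetical stand-ins, not MNE objects:

```python
import types


class SimpleRecording:
    """Hypothetical class; CamelCase name, like MNE's Raw or Epochs."""

    def __init__(self, sfreq):
        self.sfreq = sfreq

    def duration(self, n_times):
        """Length in seconds of n_times samples."""
        return n_times / self.sfreq


def make_recording(sfreq):
    """Hypothetical factory function; lowercase, underscore-separated name."""
    return SimpleRecording(sfreq)


namespace = vars(SimpleRecording)  # the class namespace, as a read-only mappingproxy
print(type(namespace).__name__, sorted(k for k in namespace if not k.startswith('__')))
```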

mappingproxy({'__module__': 'mne.io.fiff.raw',

'__doc__': 'Raw data in FIF format.\n\n Parameters\n ----------\n fname : str | file-like\n The raw filename to load. For files that have automatically been split,\n the split part will be automatically loaded. Filenames not ending with\n ``raw.fif``, ``raw_sss.fif``, ``raw_tsss.fif``, ``_meg.fif``,\n ``_eeg.fif``, or ``_ieeg.fif`` (with or without an optional additional\n ``.gz`` extension) will generate a warning. If a file-like object is\n provided, preloading must be used.\n\n .. versionchanged:: 0.18\n Support for file-like objects.\n allow_maxshield : bool | str (default False)\n If True, allow loading of data that has been recorded with internal\n active compensation (MaxShield). Data recorded with MaxShield should\n generally not be loaded directly, but should first be processed using\n SSS/tSSS to remove the compensation signals that may also affect brain\n activity. Can also be "yes" to load without eliciting a warning.\n \n preload : bool or str (default False)\n Preload data into memory for data manipulation and faster indexing.\n If True, the data will be preloaded into memory (fast, requires\n large amount of memory). If preload is a string, preload is the\n file name of a memory-mapped file which is used to store the data\n on the hard drive (slower, requires less memory).\n \n on_split_missing : str\n Can be ``\'raise\'`` (default) to raise an error, ``\'warn\'`` to emit a\n warning, or ``\'ignore\'`` to ignore when split file is missing.\n \n .. versionadded:: 0.22\n \n verbose : bool | str | int | None\n Control verbosity of the logging output. If ``None``, use the default\n verbosity level. See the :ref:`logging documentation <tut-logging>` and\n :func:`mne.verbose` for details. 
Should only be passed as a keyword\n argument.\n\n Attributes\n ----------\n \n info : mne.Info\n The :class:`mne.Info` object with information about the sensors and methods of measurement.\n ch_names : list of string\n List of channels\' names.\n n_times : int\n Total number of time points in the raw file.\n times : ndarray\n Time vector in seconds. Starts from 0, independently of `first_samp`\n value. Time interval between consecutive time samples is equal to the\n inverse of the sampling frequency.\n preload : bool\n Indicates whether raw data are in memory.\n \n verbose : bool | str | int | None\n Control verbosity of the logging output. If ``None``, use the default\n verbosity level. See the :ref:`logging documentation <tut-logging>` and\n :func:`mne.verbose` for details. Should only be passed as a keyword\n argument.\n ',

'__init__': <function mne.io.fiff.raw.__init__(self, fname, allow_maxshield=False, preload=False, on_split_missing='raise', verbose=None)>,

'_read_raw_file': <function mne.io.fiff.raw._read_raw_file(self, fname, allow_maxshield, preload, do_check_ext=True, verbose=None)>,

'_dtype': <property at 0x12e97c450>,

'_read_segment_file': <function mne.io.fiff.raw.Raw._read_segment_file(self, data, idx, fi, start, stop, cals, mult)>,

'fix_mag_coil_types': <function mne.io.fiff.raw.Raw.fix_mag_coil_types(self)>,

'acqparser': <property at 0x12e97c590>})

The radius of the circle is 10.

| Planet | R (km) | mass (× 10^21 kg) |
|---|---|---|
| Sun | 696000 | 1.9891e+09 |
| Earth | 6371 | 5973.6 |
| Moon | 1737 | 73.5 |
| Mars | 3390 | 641.85 |

Note

Note that there are five types of callouts, including: note, warning, important, tip, and caution.

Pro Tip

This is an example of a callout with a caption.

Important

Important

Danger

Caution

Warning

Warning

Expand To Learn About Collapse

This is an example of a ‘folded’ caution callout that can be expanded by the user. You can use collapse="true" to collapse it by default or collapse="false" to make a collapsible callout that is expanded by default.